Automated AWS Elastic IP monitoring with Shodan

Abstract:

AWS is the global leader in cloud infrastructure and has so far done a great job of securing the underlying fabric and communicating the shared responsibility model. However, vulnerable resources continue to be deployed in the cloud, where malicious actors target them as a foothold to move laterally in search of valuable data, among other things.

Sites like Shodan then index these exposed systems and expose them further to wider audiences, script kiddies included.

So, wouldn't it be nice to know whether ANY of your assigned internet-facing IPs on AWS are indexed by scanners like Shodan?

This write-up describes a fully automated way to achieve that oversight by leveraging Shodan's search intelligence, so that potentially misconfigured resources can be protected before they are taken over.

This document is intended for everyone from security leadership to security teams and is structured accordingly: the first part gives an overview of the solution architecture and discusses the serverless technologies involved, while the second part provides the full code and a step-by-step deployment of the solution.

Background:

This project was created with the team at asecure.cloud. AsecureCloud provides security assessments for your AWS environment along with recommendations and templates to remediate issues.

In addition, asecure.cloud hosts a FREE library of 500+ customizable AWS security configurations and best practices, available in Terraform, CloudFormation, and AWS CLI formats.

Pre-requisites:

NOTE: Tested on a Fedora 33 host.

Solution Overview:

Our solution deploys [1] a Lambda function that crawls all existing public IPv4 addresses from the following AWS services, across all active regions in the account:

- EC2 Elastic IPs

- Elastic Load Balancing (ELB and ELBv2)

- Amazon Elasticsearch Service

- Amazon MQ (message broker service)

- AWS Database Migration Service (DMS)

- Amazon Relational Database Service

[2] As they are crawled, these IPs are stored in an Amazon DynamoDB table. Once all the IPs are crawled and stored, [3] the Lambda function scans the table for the stored IPs, [4] checks each one against the Shodan API for its risk level and, accordingly, [5] updates its attributes in the table.
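The crawl step needs to know, for each service, which boto3 call exposes a public address. The following is a minimal sketch under stated assumptions, not the author's code: `SERVICE_SOURCES` and `to_ipv4s` are hypothetical names, and the response field paths are assumptions, since the article does not show how each service's address is extracted. Elastic IPs, DMS and Amazon MQ return IP addresses directly, while load balancers, Elasticsearch and RDS return DNS names that would have to be resolved to IPv4 addresses first.

```python
from __future__ import annotations

import ipaddress
import socket

# Hypothetical lookup table: for each crawled service, the boto3 client name,
# a read-only call, and the response field assumed to hold the public address.
SERVICE_SOURCES = {
    "EC2 Elastic IPs": ("ec2", "describe_addresses", "Addresses[].PublicIp"),
    "Classic ELB": ("elb", "describe_load_balancers", "LoadBalancerDescriptions[].DNSName"),
    "ELBv2": ("elbv2", "describe_load_balancers", "LoadBalancers[].DNSName"),
    "Elasticsearch Service": ("es", "describe_elasticsearch_domains", "DomainStatusList[].Endpoint"),
    "Amazon MQ": ("mq", "describe_broker", "BrokerInstances[].IpAddress"),
    "AWS DMS": ("dms", "describe_replication_instances",
                "ReplicationInstances[].ReplicationInstancePublicIpAddress"),
    "Amazon RDS": ("rds", "describe_db_instances", "DBInstances[].Endpoint.Address"),
}


def to_ipv4s(address: str) -> list[str]:
    """Return the address itself if it is an IPv4 literal, else resolve it via DNS."""
    try:
        return [str(ipaddress.IPv4Address(address))]
    except ValueError:
        # Load balancer, Elasticsearch and RDS endpoints are DNS names that may
        # resolve to several IPs; gethostbyname_ex returns all of them.
        return socket.gethostbyname_ex(address)[2]
```

Resolving DNS names at crawl time only captures the IPs in use at that moment; load balancer IPs in particular can change later.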

How does our solution work?

Python code:

Snippet 1.1 lists all the Python library imports used across the project.

In snippet 1.2:

- default_region: the name of the region in which the Lambda function is executed, read from a predefined environment variable provided by AWS.

- dynamoDBTableName: a user-defined variable that is set in the AWS Lambda configuration (environment variables).

- clientDynamo: It will create a service client for DynamoDB which will be used to interact with the DynamoDB table.

- clientRegion: an EC2 service client used to get the list of active regions. describe_regions() lists all the active regions along with some extra details; the loop that follows keeps only the region names and stores them in the activeRegionNames variable.

- The statements that follow are the function calls that crawl the IPs and test them with Shodan; the variables defined above are passed to these functions.

- datetimeToepochtime(dateTime): receives the time returned by the boto3 client calls and converts it to epoch time, which is later stored in the table as the Time of Scan.

- accountID: Using AWS Security Token Service client, it will fetch the ID of the account where the lambda function is executed.
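The setup described in snippet 1.2 can be sketched as follows. This is an illustration under assumptions, not the original snippet: it assumes the region comes from the `AWS_REGION` variable that the Lambda runtime sets, that the table name is passed in a hypothetical `TABLE_NAME` environment variable, and it uses a DynamoDB `Table` resource where the article mentions a client. `active_region_names`, `datetime_to_epoch` and `load_context` are hypothetical names standing in for the activeRegionNames loop and datetimeToepochtime(dateTime).

```python
from __future__ import annotations

import os
from datetime import datetime, timezone


def active_region_names(describe_regions_response: dict) -> list[str]:
    """Keep only the region names from an EC2 describe_regions() response."""
    return [region["RegionName"] for region in describe_regions_response["Regions"]]


def datetime_to_epoch(dt: datetime) -> int:
    """Convert a datetime (boto3 returns timezone-aware ones) to epoch seconds."""
    if dt.tzinfo is None:
        # Assumption: a naive datetime is interpreted as UTC.
        dt = dt.replace(tzinfo=timezone.utc)
    return int(dt.timestamp())


def load_context() -> dict:
    """Collect region, table, active regions and account ID (needs AWS credentials)."""
    import boto3  # lazy import so the pure helpers above work without boto3

    default_region = os.environ["AWS_REGION"]  # set by the Lambda runtime
    table_name = os.environ["TABLE_NAME"]      # hypothetical variable name
    ec2 = boto3.client("ec2", region_name=default_region)
    return {
        "region": default_region,
        "table": boto3.resource("dynamodb", region_name=default_region).Table(table_name),
        "regions": active_region_names(ec2.describe_regions()),
        "account_id": boto3.client("sts").get_caller_identity()["Account"],
    }
```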

Moving on to the function get_EIPs(…):

- clientEIP: an EC2 client on which describe_addresses() is called. It lists all the Elastic IPs in a region with all their attributes, from which we take the IP and the time of the scan. This time is converted to epoch time using datetimeToepochtime(…).

- EIPList: the list of all the IPs.

- In a loop, we push each IP and its metadata to the DynamoDB table. The current use status of each IP is determined from its association ID. All other metadata values have been fetched earlier and stored in variables, or are hardcoded.

- Since this has to be done for every region, the snippet runs in a loop over the activeRegionNames list.

Similarly, we will fetch the public IPs and their metadata for all other mentioned services and push them to our table.
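A minimal sketch of the get_EIPs logic, assuming a DynamoDB `Table` resource and these hypothetical attribute names (only `EIP`, the table's primary key, comes from the article). The response-to-item conversion is kept as a pure function so it can be checked without AWS; the scan time is passed in rather than read from the response, which is a simplification.

```python
from __future__ import annotations


def build_eip_items(addresses_response: dict, region: str, account_id: str,
                    scan_epoch: int) -> list[dict]:
    """Turn an EC2 describe_addresses() response into DynamoDB items."""
    items = []
    for address in addresses_response.get("Addresses", []):
        items.append({
            "EIP": address["PublicIp"],      # partition key of the table
            "Service": "EC2 Elastic IP",     # hardcoded per crawled service
            "Region": region,
            "AccountID": account_id,
            # An Elastic IP carries an AssociationId only while it is attached.
            "InUse": "AssociationId" in address,
            "TimeOfScan": scan_epoch,
        })
    return items


def push_eips(table, regions: list[str], account_id: str, scan_epoch: int) -> None:
    """Crawl every active region and write the items (needs AWS credentials)."""
    import boto3  # lazy import keeps build_eip_items usable without boto3

    for region in regions:
        ec2 = boto3.client("ec2", region_name=region)
        items = build_eip_items(ec2.describe_addresses(), region, account_id, scan_epoch)
        # batch_writer buffers put_item calls into BatchWriteItem requests.
        with table.batch_writer() as batch:
            for item in items:
                batch.put_item(Item=item)
```

An unassociated Elastic IP is usually less exposed, but keeping it in the table still shows what the account owns.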

- http: Since the requests library is not included in the Lambda Python runtime by default, we use urllib3 instead and create a PoolManager instance to make requests later.
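A sketch of a single Shodan lookup through a urllib3 `PoolManager`, using Shodan's `/shodan/host/{ip}` REST endpoint with the API key as the `key` query parameter. The article does not say how the risk level is derived, so reducing a reply to open ports and a vulnerability count is an assumption, as is treating HTTP 404 as "Shodan has no data on this IP". `summarize_host` and `lookup_ip` are hypothetical names.

```python
from __future__ import annotations

import json

SHODAN_HOST_URL = "https://api.shodan.io/shodan/host/{ip}"


def summarize_host(status: int, body: bytes) -> dict:
    """Reduce a Shodan host-lookup reply to a few fields worth storing."""
    if status == 404:
        # Assumed meaning: Shodan has no information about this IP.
        return {"Indexed": False, "OpenPorts": [], "VulnCount": 0}
    if status != 200:
        raise RuntimeError(f"Shodan lookup failed with HTTP {status}")
    data = json.loads(body)
    return {
        "Indexed": True,
        "OpenPorts": sorted(data.get("ports", [])),
        "VulnCount": len(data.get("vulns", [])),
    }


def lookup_ip(http, ip: str, api_key: str) -> dict:
    """Query Shodan with a urllib3.PoolManager, e.g. http = urllib3.PoolManager()."""
    # For GET requests, urllib3 encodes `fields` into the query string.
    response = http.request("GET", SHODAN_HOST_URL.format(ip=ip), fields={"key": api_key})
    return summarize_host(response.status, response.data)
```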

Moving on to the function shodantest(…):

- responseIPs: We first scan our DynamoDB table for the EIP attribute and parse the result to get the list of all the IPs in the table, which is stored in a variable.

- shodan_key: Since the API key is sensitive and stored encrypted, we fetch the decrypted key from AWS Systems Manager Parameter Store and store it in a variable.

- Looping over the list of IPs, we check each IP for its risk and update the existing item in the table accordingly.
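The shodantest(…) steps can be sketched as below, assuming a DynamoDB `Table` resource and a hypothetical SSM parameter name. The scan follows `LastEvaluatedKey`, because a single scan call returns at most 1 MB of data, and the update uses expression attribute names so that attribute names that collide with DynamoDB reserved words still work. `lookup` stands for any function returning the attributes to store for an IP; it is a hypothetical parameter, not part of the original code.

```python
from __future__ import annotations

from collections.abc import Callable


def stored_ips(table) -> list[str]:
    """Scan only the EIP attribute, following pagination."""
    ips, kwargs = [], {"ProjectionExpression": "EIP"}
    while True:
        page = table.scan(**kwargs)
        ips.extend(item["EIP"] for item in page.get("Items", []))
        if "LastEvaluatedKey" not in page:
            return ips
        kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]


def get_shodan_key(parameter_name: str) -> str:
    """Fetch the decrypted SecureString value from Parameter Store."""
    import boto3  # lazy import so build_update can be used without boto3

    ssm = boto3.client("ssm")
    return ssm.get_parameter(Name=parameter_name, WithDecryption=True)["Parameter"]["Value"]


def build_update(ip: str, result: dict) -> dict:
    """Build update_item() arguments that SET every key of result on the item."""
    names = {f"#a{i}": key for i, key in enumerate(result)}
    values = {f":v{i}": value for i, value in enumerate(result.values())}
    sets = ", ".join(f"#a{i} = :v{i}" for i in range(len(result)))
    return {
        "Key": {"EIP": ip},
        "UpdateExpression": "SET " + sets,
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
    }


def shodan_test(table, api_key: str, lookup: Callable[[str, str], dict]) -> None:
    """Check every stored IP and write the result back to its item."""
    for ip in stored_ips(table):
        result = lookup(ip, api_key)
        if result:  # an empty SET expression would be rejected
            table.update_item(**build_update(ip, result))
```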

Terraform code:

Providers

- aws provider: refer to the Terraform AWS provider documentation for credentials and other configuration.

- archive provider: used to create the ZIP archive of the files to be uploaded to Lambda.

Variables

- table_name: DynamoDB table name to be created for storing our data.

- lambda_function_name: The function name to be created.

- shodan_key: replace with your own Shodan API key, available on your Shodan dashboard. P.S. The key shown here is just an example and won't work.

Resources and data sources

- aws_dynamodb_table: creates a DynamoDB table with EIP as the primary key. Since DynamoDB is a NoSQL service, the other attributes do not need to be defined in advance.

- archive_file: creates a ZIP archive of the directory containing the Python code to be uploaded to Lambda.

- aws_ssm_parameter: uploads our API key to Parameter Store and encrypts it.

- aws_iam_role: creates an IAM role that the Lambda service can assume; it is attached to our Lambda resource.

- aws_iam_policy: following the principle of least privilege, creates an IAM policy that grants the Lambda function's role only the actions it needs.

- aws_iam_role_policy_attachment: attaches the IAM policy we created to the IAM role.

- aws_lambda_function: creates our Lambda function with the required configuration and attaches the role to it.

- Increase the timeout if required.
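To make the least-privilege point concrete, here is a sketch of the two policy documents involved, written as Python dictionaries whose JSON output has the shape that the `assume_role_policy` argument of aws_iam_role and the `policy` argument of aws_iam_policy expect. The action list is an assumption inferred from the calls described in this article, not the author's actual policy, and `TABLE_NAME` and `PARAMETER_NAME` in the ARNs are placeholders to replace.

```python
import json

# Trust policy: allows the Lambda service to assume the role (aws_iam_role).
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "lambda.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# Permissions policy (aws_iam_policy): read-only describe calls for the crawled
# services, table and parameter access, and CloudWatch Logs for the function.
PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Resource": "*", "Action": [
            "ec2:DescribeRegions", "ec2:DescribeAddresses",
            "elasticloadbalancing:DescribeLoadBalancers",
            "es:ListDomainNames", "es:DescribeElasticsearchDomains",
            "mq:ListBrokers", "mq:DescribeBroker",
            "dms:DescribeReplicationInstances", "rds:DescribeDBInstances",
        ]},
        {"Effect": "Allow",
         "Action": ["dynamodb:PutItem", "dynamodb:BatchWriteItem",
                    "dynamodb:Scan", "dynamodb:UpdateItem"],
         "Resource": "arn:aws:dynamodb:*:*:table/TABLE_NAME"},      # placeholder
        {"Effect": "Allow", "Action": "ssm:GetParameter",
         "Resource": "arn:aws:ssm:*:*:parameter/PARAMETER_NAME"},  # placeholder
        {"Effect": "Allow",
         "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
         "Resource": "*"},
    ],
}

if __name__ == "__main__":
    print(json.dumps(TRUST_POLICY, indent=2))
    print(json.dumps(PERMISSIONS_POLICY, indent=2))
```

sts:GetCallerIdentity needs no explicit permission, which is why fetching the account ID does not appear in the list.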

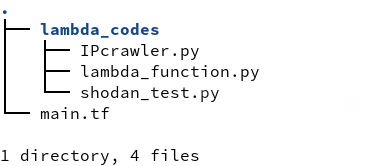

The directory structure of the code:

- lambda_codes: contains the Python code to be uploaded to the AWS Lambda function.

- main.tf: contains the code that creates the required infrastructure.
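In the Terraform setup, archive_file packages lambda_codes. For a quick local check of what ends up in the deployment package, a rough Python equivalent could look like the sketch below; `build_package` is a hypothetical helper, not part of the project. Paths are stored relative to the source directory so the handler module sits at the root of the ZIP, which is where Lambda looks for it.

```python
from __future__ import annotations

import zipfile
from pathlib import Path


def build_package(source_dir: str, zip_path: str) -> list[str]:
    """Zip every file under source_dir with paths relative to it; return the names."""
    source = Path(source_dir)
    # Collect files before opening the archive so it never includes itself.
    files = sorted(p for p in source.rglob("*") if p.is_file())
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in files:
            arcname = path.relative_to(source).as_posix()
            archive.write(path, arcname)
            names.append(arcname)
    return names
```

Usage: `build_package("lambda_codes", "lambda.zip")`, keeping the ZIP outside the source directory.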

Creating the infrastructure:

terraform init

terraform apply

Infrastructure created:

Testing our lambda function:
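Besides the console, one way to test the function is to invoke it synchronously with boto3 and then read back the table. A sketch, assuming the function and table names you chose in the Terraform variables and the hypothetical attribute names used in the earlier sketches (`EIP` is the only one the article confirms); `format_item` and `invoke_and_report` are hypothetical helpers.

```python
from __future__ import annotations

import json


def format_item(item: dict) -> str:
    """Render one stored IP as a line of text."""
    ports = ",".join(str(p) for p in item.get("OpenPorts", [])) or "-"
    return f'{item["EIP"]:<15} {item.get("Region", "?"):<14} ports={ports}'


def invoke_and_report(function_name: str, table_name: str) -> None:
    """Invoke the function, wait for it to finish, then print the stored items."""
    import boto3  # lazy import so format_item works without boto3

    response = boto3.client("lambda").invoke(
        FunctionName=function_name, InvocationType="RequestResponse")
    print("status:", response["StatusCode"], json.loads(response["Payload"].read() or b"null"))
    # Only the first page of the scan is read here, which is enough for a quick check.
    for item in boto3.resource("dynamodb").Table(table_name).scan()["Items"]:
        print(format_item(item))
```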

How to destroy the infrastructure (Cleanup):

terraform destroy

For any queries, corrections or suggestions, connect with me on my LinkedIn.
