KEDA: Kubernetes Native Event Driven Autoscaler

A few months ago, I was reading an article and came to know about KEDA. KEDA has the potential to become a game changer in the Kubernetes autoscaling world.

KEDA is a purely event-driven autoscaler that works alongside standard Kubernetes components like the HPA (Horizontal Pod Autoscaler). With KEDA you can map your app for autoscaling without any changes to the app itself.

KEDA has three major components:

KEDA Operator: The KEDA Operator works as an agent that can activate and deactivate Kubernetes deployments on the basis of configured events. It runs as a separate container in the Kubernetes cluster.

KEDA Metrics Server: The KEDA metrics server exposes event data such as queue length or stream lag to the Horizontal Pod Autoscaler to drive scale in/out.

Scaler: A scaler connects to an external source such as Kafka, fetches metrics such as topic lag, and presents them to the metrics server. When you deploy a ScaledObject, you provide a trigger definition for the event source.
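To make the trigger definition concrete, here is a minimal sketch in Python that builds a ScaledObject manifest with a Kafka trigger and prints it as JSON. The field names follow the KEDA Kafka scaler as I understand it; the object name, deployment name, broker address, consumer group, and threshold values are assumptions chosen for illustration, not the values used in this demo.

```python
import json


def kafka_scaled_object(name, deployment, bootstrap_servers, consumer_group,
                        topic, lag_threshold=10, min_replicas=0, max_replicas=5):
    """Build a KEDA ScaledObject manifest (as a dict) with one Kafka trigger."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": name},
        "spec": {
            # The Deployment that KEDA should scale.
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": min_replicas,
            "maxReplicaCount": max_replicas,
            "triggers": [
                {
                    "type": "kafka",
                    "metadata": {
                        "bootstrapServers": bootstrap_servers,
                        "consumerGroup": consumer_group,
                        "topic": topic,
                        # Trigger metadata values are strings in the manifest.
                        "lagThreshold": str(lag_threshold),
                    },
                }
            ],
        },
    }


# All names and addresses below are hypothetical placeholders.
manifest = kafka_scaled_object(
    name="processor-app-scaler",
    deployment="processor-app",
    bootstrap_servers="my-kafka-broker:9092",
    consumer_group="processor-group",
    topic="events",
)
print(json.dumps(manifest, indent=2))
```

Since kubectl accepts JSON manifests as well as YAML, the printed output could be saved to a file and applied with kubectl apply -f once the placeholders are replaced with real values.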

The KEDA documentation lists the pre-built scalers.

Architecture:

KEDA supports multiple scalers to scale a variety of deployments driven by sources like Kafka, Redis, ActiveMQ, Prometheus, cloud services, etc.

KEDA in action: In this demo, we'll explore the Kafka-based scaler and then briefly talk about HTTP-based scaling.

Prerequisites:

1. Docker

2. Minikube

3. Kubectl

4. Kafka local cluster

5. Helm

Minikube installation: Refer to https://minikube.sigs.k8s.io/docs/start/

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-darwin-amd64

sudo install minikube-darwin-amd64 /usr/local/bin/minikube

Kubectl installation: Refer to https://kubernetes.io/docs/tasks/tools/install-kubectl-macos/

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl"

Kafka Broker: Single node local cluster

1. Start Zookeeper. It will start on the default port 2181. You can change the port in the zookeeper.properties file.

2. Start the local cluster. The Kafka broker will start on the default port 9092.

3. Create a topic named events.

KEDA installation:

1. helm repo add kedacore https://kedacore.github.io/charts

2. helm repo update

3. kubectl create namespace keda

4. helm install keda kedacore/keda --namespace keda

Verify the installation. Operator and metrics server should be up and running.

Now develop a Kafka consumer app.
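The consumer app's code is not reproduced here, so the following is only a minimal sketch of what such an app could look like in Python, assuming the third-party kafka-python client. The function names, the consumer group processor-group, and the broker address are hypothetical; the important point is that the group id must match the consumer group that the scaler monitors for lag.

```python
import json


def process_message(raw: bytes) -> dict:
    """Decode one record value; fall back to plain text if it is not JSON."""
    text = raw.decode("utf-8")
    try:
        payload = json.loads(text)
    except json.JSONDecodeError:
        payload = text
    return {"payload": payload, "size": len(raw)}


def run_consumer(bootstrap_servers="localhost:9092", topic="events",
                 group_id="processor-group"):
    """Consume records from the topic forever and print each processed value."""
    # Imported lazily so process_message works without the client installed.
    # Assumes the kafka-python package (pip install kafka-python).
    from kafka import KafkaConsumer

    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=bootstrap_servers,
        group_id=group_id,             # must match the scaler's consumer group
        auto_offset_reset="earliest",  # start from the oldest unread record
    )
    for record in consumer:
        print(process_message(record.value))


# Quick local check of the processing step, no broker required.
print(process_message(b'{"id": 1}'))
```

Inside the container, the entry point would call run_consumer() with the broker address reachable from the cluster.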

Build a docker image

docker build -t processor-app .

Create a deployment file and deploy the Kafka message processor app.

kubectl create -f deployment.yaml

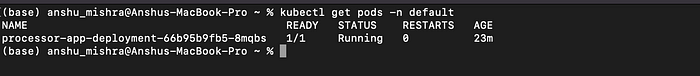

Verify that the app is up and running.

All set: we have now installed KEDA and deployed our Kafka consumer app.

Now deploy the ScaledObject, which monitors the Kafka topic and generates an event whenever there is lag.

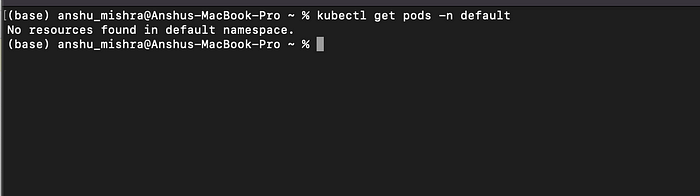

Now see KEDA in action. Since we have defined minReplicaCount as 0, KEDA will scale the pods down to zero.

Check whether any consumer pod is running. There should be 0 pods, because the ScaledObject definition allows the deployment to scale down to zero.

Start sending messages to the “events” topic.

Check whether a consumer pod is coming up. Since we have sent some messages to the topic, there should be pods up and running, ready to consume messages.

Check the logs to see whether the pods have started processing the messages.

It worked :)

Note: Only one pod came up because all of the messages are going to a single partition. The number of replicas will not exceed the number of topic partitions, because if a topic has more consumers than partitions, the extra consumers have to sit idle.
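The partition cap in the note above can be illustrated with a rough sketch of the replica arithmetic. This is a simplified model under my own assumptions (total lag divided by a lag threshold, rounded up, then capped by the partition count and the configured limits), not KEDA's actual implementation, which also involves polling intervals, cooldown periods, and HPA behavior. The function and parameter names are hypothetical.

```python
import math


def desired_replicas(total_lag, lag_threshold, partitions,
                     min_replicas=0, max_replicas=5):
    """Rough estimate of the replica count for a Kafka-triggered workload."""
    if total_lag <= 0:
        # No lag: fall back to the minimum (0 means scale to zero).
        return min_replicas
    # One replica per lag_threshold messages of lag, rounded up.
    wanted = math.ceil(total_lag / lag_threshold)
    # Consumers beyond the partition count would sit idle, so cap there.
    wanted = min(wanted, partitions)
    # With lag present, run at least one replica, and respect the upper limit.
    lower = max(min_replicas, 1)
    return max(lower, min(wanted, max_replicas))


print(desired_replicas(total_lag=0, lag_threshold=10, partitions=1))
print(desired_replicas(total_lag=25, lag_threshold=10, partitions=1))
print(desired_replicas(total_lag=25, lag_threshold=10, partitions=4))
```

With a single partition the estimate stays at one replica no matter how large the lag grows, which matches what the demo showed; adding partitions is what lets the consumer count rise.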

HTTP scaling: HTTP traffic is tricky to scale. The KEDA community is building an HTTP add-on, which allows Kubernetes users to automatically scale their HTTP servers up and down (including to/from zero) based on incoming HTTP traffic. An HTTP deployment can also be scaled via the Prometheus trigger. The idea is to install an ingress controller and route all HTTP traffic through it, then use a Prometheus server to monitor and collect traffic metrics. The KEDA Prometheus trigger can then scale pods up and down on the basis of the total number of incoming HTTP requests.
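As a hedged illustration of the Prometheus approach, the sketch below builds the trigger section that would go into a ScaledObject's triggers list. The metadata keys (serverAddress, query, threshold) are the ones I understand the KEDA Prometheus scaler to use. The Prometheus address is a hypothetical placeholder, and the query assumes the NGINX ingress controller's nginx_ingress_controller_requests metric; a different ingress controller would need a different query.

```python
import json


def prometheus_http_trigger(server_address, query, threshold):
    """Build a KEDA Prometheus trigger (as a dict) for request-rate scaling."""
    return {
        "type": "prometheus",
        "metadata": {
            "serverAddress": server_address,
            "query": query,
            # Target value per replica; metadata values are strings.
            "threshold": str(threshold),
        },
    }


trigger = prometheus_http_trigger(
    # Hypothetical in-cluster Prometheus address.
    server_address="http://prometheus-server.monitoring.svc:9090",
    # Assumes the NGINX ingress controller exposes this request counter.
    query="sum(rate(nginx_ingress_controller_requests[1m]))",
    threshold=100,
)
print(json.dumps(trigger, indent=2))
```

Here the threshold means roughly "one replica per 100 requests per second"; the right value depends on how much traffic a single pod can handle.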

Conclusion: KEDA offers an alternative to the standard Kubernetes scaling method, which looks at indicators such as a container's CPU load and memory consumption. KEDA is proactive in nature and scales according to indicators such as message queue size in event sources like Kafka. KEDA can run both in the cloud and at the edge and has no external dependencies. KEDA has lots of built-in scalers for scaling Kubernetes workloads, and it can be used to proactively scale AWS EKS workloads as well.
